CData Software Acquires Data Virtuality to Modernize Data Virtualization for the Enterprise

Data Virtuality brings enterprise data virtualization capabilities to CData, delivering highly-performant access to live data at any scale.

Explore how you can use the Data Virtuality Platform in different scenarios.

Learn more about the Data Virtuality Platform or to make your work with Data Virtuality even more successful.

Insights on product updates, useful guides and further informative articles.

Find insightful webinars, whitepapers and ebooks in our resource library.

Stronger together. Learn more about our partner programs.

Read, watch and learn what our customers achieved with Data Virtuality.

In our documentation you will find everything to know about our products

Read the answers to frequently asked questions about Data Virtuality.

In this on-demand webinar, we look at how a modern data architecture can help data scientists to be faster and to work more efficiently.

Learn more about us as a company, our strong partner ecosystem and our career opportunities.

How we achieve data security and transparency

Most companies these days are now operating on a distributed, multi-cloud, hybrid computing environment that spans on-premises, multiple clouds, SaaS applications and the edge. Therefore, their data is increasingly distributed across this environment in multiple different types of data stores. This includes relational databases, NoSQL databases, cloud storage, file systems, SaaS application databases, streaming data platforms, edge databases, and more.

Some of these data stores are associated with transaction systems and some are associated with analytical systems like data lakes, lakehouses, data warehouses, data marts, and graph databases. Then there are content stores holding documents, video, images, and audio. Also, new data sources continue to emerge inside and outside the enterprise almost on a daily basis.

Given this backdrop and with so much new data available, there has been a steep rise in the number of people wanting to engineer and integrate data from multiple different data sources to make it available for analysis. For example, ETL developers that clean and integrate raw data from transaction systems for use in a data warehouse.

Also, data scientists want to clean and integrate raw data from streaming data sources, transaction systems, external data sources, content stores and also analytical systems to provide data to train new machine learning models. Another example would be people wanting to clean and integrate data to populate a graph database to make data available for fraud analysis. These people are typically referred to as data producers and more of them are emerging across enterprises as the data literacy improves.

In addition, there are data consumers like business analysts that want to analyse data and produce insights in reports and dashboards to support decision making. The problem, however, is often that for many consumers, the data they need is not all in one location.

Most of what they need is already engineered and available for access in an analytical data store (e.g., in a data mart), but it is frequently the case that some is not. That additional data may be in another data mart, still in a source transaction system, or perhaps a new external data source has emerged that could be extraordinarily valuable, but the data is not yet in the data mart. So, like it or not, the data consumer has no choice but to try to figure out how to integrate data just to be able to analyse it and create the insights they need.

In a sense, this is forcing complexity on the consumer to have to connect to multiple data sources and integrate data first with self-service data preparation tools before they can do what they really want to do which is analysis and reporting to create new insights.

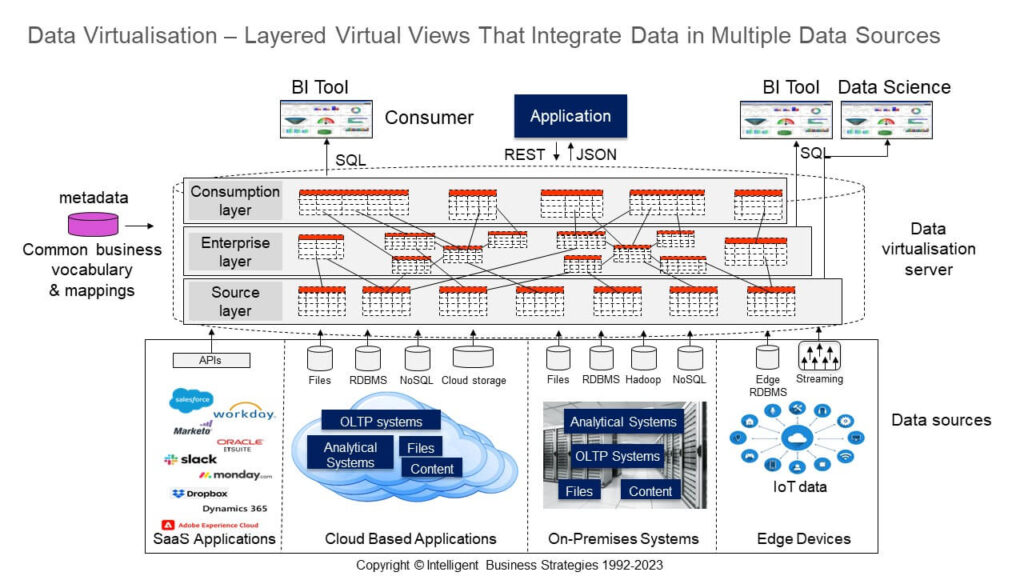

There are, of course, a number of ways to integrate data for use in analytics. One of the most agile ways is to use data virtualisation which allows you to define virtual views consisting of integrated data from one or more data sources that can be queried using SQL like relational database tables. This is shown in Figure 1. Note that virtual views in a data virtualisation server can be layered on top of each other to provide additional transformation power while also simplifying access to data and shielding users from structural changes in underlying data sources.

Figure 1

Data virtualisation integrates data on-demand without the need to copy it and is not new. It’s been around for many years and there are thousands of companies out there using this technology today to simplify access to data that resides across multiple data stores. This includes the data in the different types of aforementioned data stores be they on-premises, on one or more clouds or at the edge. But let’s be clear about one thing. The objective of data virtualisation is not to replace a data warehouse, or a lakehouse, but it does enable you to access data in multiple data stores (which could include a data warehouse, one or more data marts, etc.), without the need to copy it. That can be very valuable to both the data producers and data consumers.

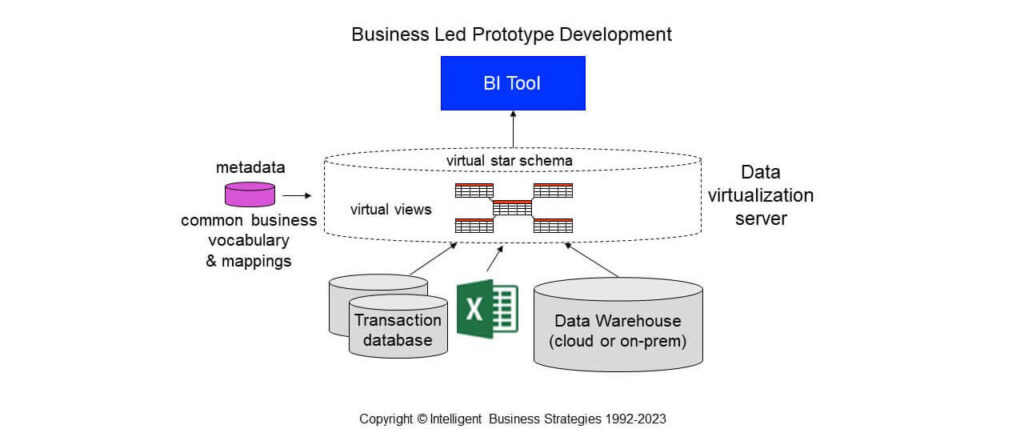

In the case of a data producer, data virtualisation enables them to leave data where it is, which may be important for compliance reasons attached to data sovereignty. It also encourages early prototyping. For example, Figure 2 shows a very simple example where the data needed by the business is not all in a data warehouse. There is other data in a transaction system and an Excel spreadsheet that is not yet in a data warehouse.

Figure 2

In this case, there is no need to wait for design changes to the data warehouse and new ETL jobs to be built which is difficult to do when a consumer doesn’t know exactly what they want. Instead, a data producer can work with business colleagues and use data virtualisation to integrate data in a data warehouse with data in other sources not yet available in a data warehouse, without having to copy it and then make it available to a consumer via virtual views.

The user then accessed the virtual views as if the data was integrated to enable them to produce new insights using self-service BI. It enables a prototype virtual data model (e.g., a star schema) to be quickly created in a data virtualisation server while hiding the complexity of multiple data stores from the user.

You can also use common business data names in virtual views to create a common semantic layer for all tools to use and hide physical data names in underlying data stores. As business user requirements unfold, changes can be easily made on a frequent basis to the virtual data model and to the data mappings metadata in the data virtualisation server while leaving data where it is. This enables rapid prototyping until such time as the business user is happy.

The point about this is that it’s flexible, agile development. Business professionals are involved in the design and prototyping at an early stage, they can quickly start producing the insights they need using self-service BI and you don’t have to wait to make changes to a data warehouse. You can then bring the data into the data warehouse later.

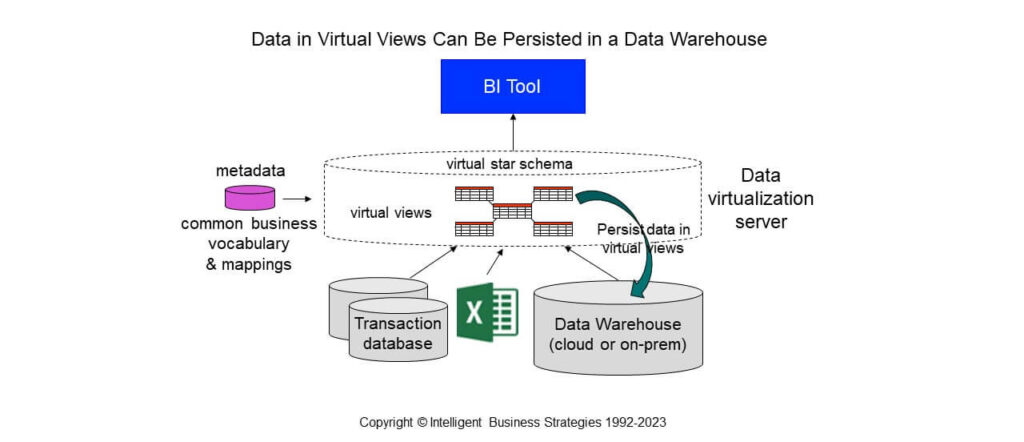

You might think that bringing the data into a data warehouse at a later date would mean you would have to rebuild the data engineering pipeline again in a separate ETL tool because there is no standard to port the data transformation and integration mapping metadata from data virtualisation to another vendor ETL tool. Although it is true that there is no standard, what many people don’t realise is that data virtualisation software is capable of ETL and can also persist virtual views in your data warehouse e.g., in AWS Redshift or Snowflake. This is shown in Figure 3. You don’t need to rebuild the data engineering pipeline in another tool. Furthermore, this can be scheduled to happen on a frequent basis.

Figure 3

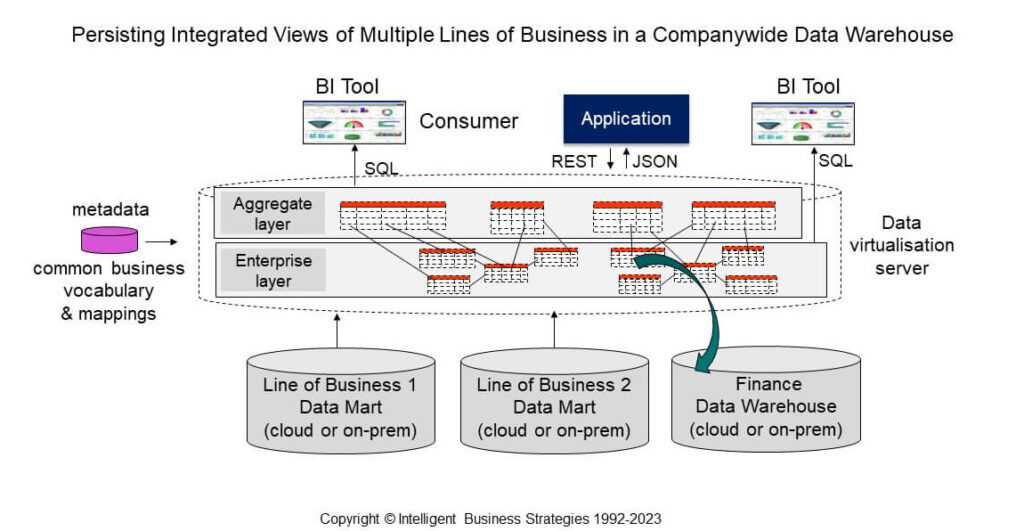

What’s more is the same can apply to virtual views created to aggregate data and simplify access to multiple analytical systems by business users. For example, Figure 4 shows virtual views of data that has been integrated from multiple different line of business data marts to provide a companywide view of overall business performance. Further aggregation views have been built on top of the company wide views. You can choose to persist higher value or frequently accessed aggregate virtual views that join data from the multiple data marts into a finance data warehouse for example thereby extending the data warehouse and making the data available there.

Figure 4

This ability to persist data in virtual views of integrated data across multiple data stores is like materialising views and benefits both data producers and business user consumers, providing more agility.

It also shows that data virtualisation software is now transitioning beyond data virtualisation to more of a data fabric capability offering customers multiple forms of data integration – the ability to build data engineering pipelines that transform, integrate, and persist data as well as data pipelines that transform and integrate data on-demand. This means agility, early prototyping, and progress towards persisting data is possible in one platform. It also allows the same platform to offer different data integrations options that can be optimised for different business use cases.

Watch the Recorded Webinar with Mike Ferguson, CEO of Intelligent Business Strategies, originally aired on September 26! Dive into the complexities of current market trends, including data fabric and data mesh, and discover how AI-enhanced tools are streamlining data integration in today’s fast-paced environment.

Don’t miss this chance to gain invaluable insights and empower your organization for a data-driven future.

About the author

Mike Ferguson, an accomplished independent analyst, and thought leader excels in Business Analytics, ML, Big Data, Data Management, and Intelligent Business. Recognized for his expertise in integrating BI/ML/AI to achieve real-time business optimization. As chairman of Big Data LDN and a member of EDM Council CDMC Executive Advisory Board, he’s a prominent figure in the industry.

Data Virtuality brings enterprise data virtualization capabilities to CData, delivering highly-performant access to live data at any scale.

Discover how integrating data warehouse automation with data virtualization can lead to better managed and optimized data workflows.

Discover how our ChatGPT powered SQL AI Assistant can help Data Virtuality users boost their performance when working with data.

While caching offers certain advantages, it’s not a one-size-fits-all solution. To comprehensively meet business requirements, combining data virtualization with replication is key.

Explore the potential of Data Virtuality’s connector for Databricks, enhancing your data lakehouse experience with flexible integration.

Generative AI is an exciting new technology which is helping to democratise and accelerate data management tasks including data engineering.

Leipzig

Katharinenstrasse 15 | 04109 | Germany

Munich

Trimburgstraße 2 | 81249 | Germany

San Francisco

2261 Market Street #4788 | CA 94114 | USA

Follow Us on Social Media

Our mission is to enable businesses to leverage the full potential of their data by providing a single source of truth platform to connect and manage all data.